Abstraction via exemplars? A representational case study on lexical category inference in BERT

Abstract

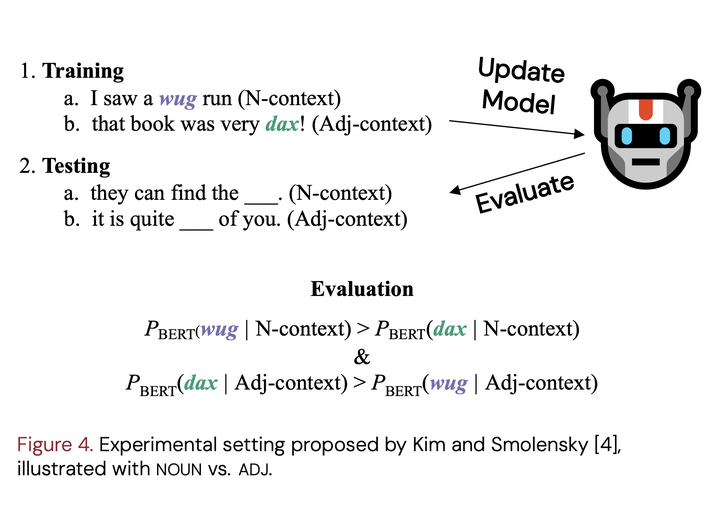

Exemplar-based accounts are often considered to be in direct opposition to pure linguistic abstraction in explaining language learners’ ability to generalize to novel expressions. However, the recent success of neural network language models (LMs) on linguistically sensitive tasks suggests that abstractions may arise via the encoding of exemplars. We provide empirical evidence for this claim by adapting an existing experiment that studies how an LM (BERT) generalizes the usage of novel tokens belonging to lexical categories such as Noun, Verb, Adjective, and Adverb after exposure to only a single instance of their usage. We analyze the representational behavior of the novel tokens in these experiments and find that BERT’s capacity to generalize to unseen expressions involving these novel tokens corresponds to the movement of novel token representations towards regions of known category exemplars in two-dimensional space. Our results suggest that learners’ encoding of exemplars can indeed give rise to abstraction-like behavior.