Abstract

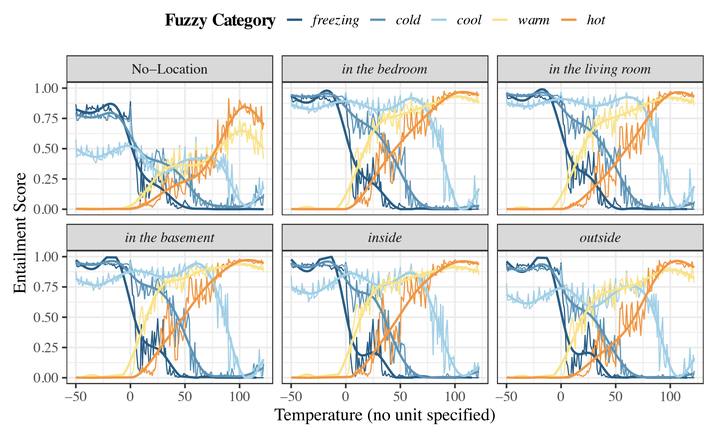

Humans often communicate using imprecise language, suggesting that fuzzy concepts with unclear boundaries are prevalent in language use. In this paper, we test the extent to which models trained to capture the distributional statistics of language exhibit fuzzy-membership patterns. Using the task of natural language inference, we test a recent state-of-the-art model on the classical case of temperature by examining how it maps temperature values to fuzzy perceptions such as cool, hot, etc. We find that the model shows patterns similar to classical fuzzy-set-theoretic formulations of linguistic hedges, albeit with a substantial amount of noise, suggesting that models trained solely on language show promise in encoding fuzziness.
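
For readers unfamiliar with the fuzzy-set view of hedges, the following minimal sketch illustrates the classical formulation in which a hedge transforms a membership value: "very" as concentration (squaring) and "somewhat" as dilation (square root). The function name `hot_membership`, the Fahrenheit scale, and the breakpoints of 70 and 90 degrees are illustrative assumptions, not values taken from this paper or from the model under study.

```python
import math


def hot_membership(temp_f, low=70.0, high=90.0):
    """Piecewise-linear membership of a temperature in the fuzzy set 'hot'.

    The breakpoints `low` and `high` are hypothetical, chosen only for illustration.
    """
    if temp_f <= low:
        return 0.0
    if temp_f >= high:
        return 1.0
    return (temp_f - low) / (high - low)


def very(mu):
    """Concentration hedge: sharpens membership by squaring it."""
    return mu ** 2


def somewhat(mu):
    """Dilation hedge: relaxes membership by taking its square root."""
    return math.sqrt(mu)


if __name__ == "__main__":
    for t in (65, 75, 80, 85, 95):
        mu = hot_membership(t)
        print(f"{t}F  hot={mu:.2f}  very hot={very(mu):.2f}  somewhat hot={somewhat(mu):.2f}")
```

Under this formulation, for any membership value in [0, 1], "very hot" never exceeds "hot", which in turn never exceeds "somewhat hot"; this ordering is the kind of pattern a language model's outputs can be compared against.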